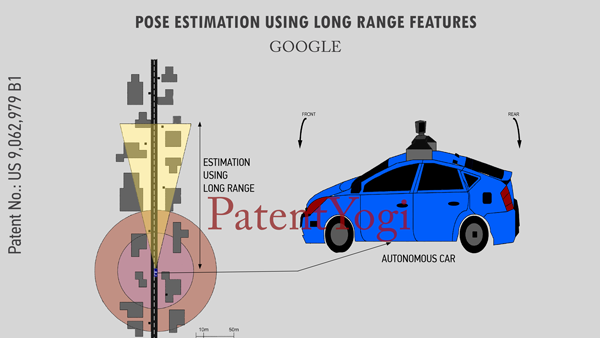

Autonomous vehicles need to be able to accurately estimate their relative geographic location in the world. To do this, they typically combine inertial pose estimation systems (e.g., using gyros, accelerometers, wheel encoders, GPS) with map-based localization approaches (e.g., generating a local map using onboard sensors and comparing it to a stored map to estimate where the vehicle is in the stored map). Noise or uncertainty in both the inertial pose estimation and localization stages makes it difficult to achieve very high accuracy. In addition, these approaches tend to focus on near-range information only.

Google’s new patent discloses a technique to use an object detected at long range to increase the accuracy of a location and heading estimate based on near range information. For example, an autonomous vehicle may use data points collected from a sensor such as a laser to generate an environmental map of environmental features. The environmental map is then compared to pre-stored map data to determine the vehicle’s geographic location and heading. A second sensor, such as a laser or camera, having a longer range than the first sensor may detect an object outside of the range and field of view of the first sensor. For example, the object may have retroreflective properties which make it identifiable in a camera image or from laser data points. The location of the object is then compared to the pre-stored map data and used to refine the vehicle’s estimated location and heading.
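To make the refinement step concrete, here is a minimal Python sketch of one way a single long-range object could tighten a heading estimate. It is an illustration under stated assumptions, not the method claimed in the patent: the `Pose` class, the `refine_heading` function, the `gain` parameter, and the simple bearing-residual correction are all hypothetical, and the sketch assumes the object's map position is already known from the pre-stored map data.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical 2D pose in the map frame (not taken from the patent)."""
    x: float        # metres
    y: float        # metres
    heading: float  # radians, counter-clockwise from the map +x axis


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def refine_heading(pose: Pose, landmark_xy: tuple, measured_bearing: float,
                   gain: float = 1.0) -> Pose:
    """Correct the heading using the bearing to one mapped long-range object.

    measured_bearing is the angle to the object relative to the vehicle's
    forward axis, as seen by the long-range sensor. gain (assumed, 0..1)
    controls how much of the bearing residual is applied.
    """
    lx, ly = landmark_xy
    # Bearing we would expect to measure if the near-range pose were exact.
    expected_bearing = wrap_angle(
        math.atan2(ly - pose.y, lx - pose.x) - pose.heading
    )
    # If the position estimate is roughly right, this residual is
    # approximately the heading error.
    residual = wrap_angle(expected_bearing - measured_bearing)
    return Pose(pose.x, pose.y, wrap_angle(pose.heading + gain * residual))


# Example: the vehicle is really at the origin with heading 0.10 rad, while
# the near-range estimate is slightly off in both position and heading.
landmark = (150.0, 20.0)                        # mapped object far ahead
true_heading = 0.10
measured = wrap_angle(math.atan2(20.0, 150.0) - true_heading)
near_range_estimate = Pose(x=0.3, y=-0.2, heading=0.13)
refined = refine_heading(near_range_estimate, landmark, measured)
```

The sketch also shows why range matters: a position error of a few tens of centimetres shifts the expected bearing to an object 150 m away by only a small fraction of a degree, so most of the residual can be attributed to heading error. A real system would more likely fold this measurement into a probabilistic filter alongside the near-range map match rather than apply it directly.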

Patent Number: 9,062,979

Patent Title: Pose estimation using long range features

Inventors: Ferguson; David I. (San Francisco, CA), Silver; David (Santa Clara, CA)

Assignee: Google Inc. (Mountain View, CA)

Family ID: 1000000376626

Appl. No.: 13/936,522

Filed: July 8, 2013

Abstract

Aspects of the present disclosure relate to using an object detected at long range to increase the accuracy of a location and heading estimate based on near range information. For example, an autonomous vehicle may use data points collected from a sensor such as a laser to generate an environmental map of environmental features. The environmental map is then compared to pre-stored map data to determine the vehicle’s geographic location and heading. A second sensor, such as a laser or camera, having a longer range than the first sensor may detect an object outside of the range and field of view of the first sensor. For example, the object may have retroreflective properties which make it identifiable in a camera image or from laser data points. The location of the object is then compared to the pre-stored map data and used to refine the vehicle’s estimated location and heading.