Ever wondered whether you could interact with your computer or smartphone the way you interact with a friend?

Currently, to interact with smartphones and computers, we use smart assistants such as Google Assistant, Cortana, or Siri. But these assistants take only audio instructions and are not aware of the surrounding environment, which limits their ability to understand the context of the instructions you give them.

However, a recent patent filing from Microsoft describes technology that could enable your smartphone to understand the real meaning of an audio instruction by capturing an image of the surrounding environment through a camera sensor while it receives the instruction.

Microsoft's technology uses an image integrated query (IMQ) system, which performs speech recognition on the audio instruction and image processing on the captured image to understand your intent. The IMQ system triggers your smartphone's camera to capture an image whenever you ask a query (such as “add this to my shopping list,” “what can I cook with this,” or “where can I buy this”) that includes a pronoun with no clear referent, such as “this”, “that”, “those”, “it”, “these”, “him”, “her”, “them”, “us”, and the like. The IMQ system then processes the captured image to identify the object of interest and takes the corresponding action.
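To make the trigger condition concrete, here is a minimal Python sketch of the pronoun check described above. It assumes the audio has already been transcribed to text. The function name `needs_image` and the pronoun set are hypothetical, taken from the examples in this article rather than from the patent's actual implementation.

```python
import re

# Pronouns listed in this article; illustrative, not an exhaustive list from the patent.
AMBIGUOUS_PRONOUNS = {"this", "that", "those", "it", "these", "him", "her", "them", "us"}


def needs_image(query: str) -> bool:
    """Return True if the transcribed query contains one of the listed pronouns.

    Hypothetical helper: a real system would also check whether the pronoun
    already has a referent in the conversation before triggering the camera.
    """
    # Split into lowercase word tokens so that "us" does not match inside "bus".
    words = re.findall(r"[a-z']+", query.lower())
    return any(word in AMBIGUOUS_PRONOUNS for word in words)


if __name__ == "__main__":
    for q in ["add this to my shopping list", "what is the weather today"]:
        print(q, "->", "capture image" if needs_image(q) else "audio only")
```

A plain word match like this over-triggers (for instance, “that” used as a conjunction), so it should be read only as an illustration of the idea.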

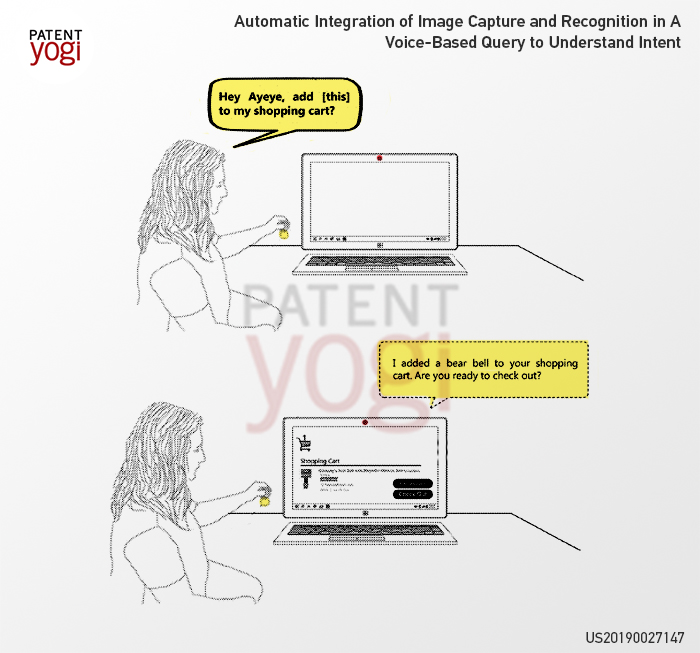

For example, as shown in the figure above, you can hold an object (here, a bear bell) in your hand and ask your computer, “add this to my shopping list.” Your computer (with the IMQ system) identifies the object of interest (here, the bear bell) from the captured image. Then, following your audio instruction, it activates a shopping application, looks up the bear bell, and places it in your shopping cart.
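The bear bell example can be sketched in a few lines of Python. This assumes that image recognition has already produced a text label for the object. The names `resolve_query` and `handle_query`, and the in-memory shopping list, are hypothetical stand-ins for whatever the real system and shopping application would use.

```python
import re


def resolve_query(query: str, object_label: str) -> str:
    """Replace the first demonstrative pronoun in the query with the object label."""
    pattern = r"\b(this|that|these|those|it)\b"
    return re.sub(pattern, object_label, query, count=1, flags=re.IGNORECASE)


def handle_query(query: str, object_label: str, shopping_list: list) -> str:
    """Resolve the query, then perform a toy version of the requested action."""
    resolved = resolve_query(query, object_label)
    # Hypothetical dispatch: only the shopping-list intent is handled here.
    if "shopping list" in resolved.lower():
        shopping_list.append(object_label)
    return resolved


if __name__ == "__main__":
    items = []
    print(handle_query("add this to my shopping list", "bear bell", items))
    print(items)
```

Substituting the label into the query text is just one simple way to combine the two inputs; the article does not say how the patent merges them internally.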

Publication Number: US20190027147A1

Patent Title: Automatic Integration of Image Capture and Recognition in a Voice-Based Query to Understand Intent

Publication date: 2019-01-24

Filing date: 2017-07-18

Inventors: Adi Diamant; Karen Master Ben-Dor

Assignee: Microsoft Technology Licensing, LLC